Enabling event-driven innovation by cataloguing topic characteristics

As a consumer of events, what do you really need to know to ensure you can use an event topic successfully in a solution? In this article, we’re going to propose what those critical “topic characteristics” might be.

I wanted to make the introduction to this article as short as possible so it could focus on the characteristics, but if you’d like a little more explanation of the “why”, take a look at my introductory post on LinkedIn.

There are many situations where a synchronous interface such as a REST API, simply isn’t the right interaction pattern for a solution, and events will serve it much better. We are beginning to see companies exposing event topics alongside their APIs as part of a “data product”. The event form factor is valuable, and we know we will see greater innovation if we make data accessible in multiple forms. However, it turns out that there’s quite a lot we need to know about an event topic to be sure it will suit our purpose.

The most obvious characteristics revolve around event content: schema, wire format etc., but that’s really not detailed enough. Let’s explore some additional things that might be important for your solution.

- Can I be sure that the schema of the events is enforced?

- How are changes to the schema handled?

- Do all events populate the entire schema, or just parts of it?

- Might the topic contain duplicate events?

- Under what circumstances might we “lose” events?

- Am I interested in all the events on the topic or just a small proportion of them?

- Are there specific conventions (e.g. headers) that I need to know about?

- Is the data encrypted on the wire and at rest?

- Can I re-read events, and if so, is there a time limit after which they are archived?

- How soon after the event occurs will I receive it?

All of these could turn out to be critical to the final implementation, but we’re not going to find them out based on the schema alone. We can quickly see that for any significant solution there are a deeper set of characteristics that we need to know. A topic with the wrong characteristics has serious repercussions and could even render a project untenable. We need to know that sooner rather than later.

It’s not just about us humans

There is another motivation for more rigorously cataloguing event topics. It is becoming increasingly possible to ask Generative AI to design, and even implement solutions on our behalf. Were we to capture a more complete set of characteristics for exposed interfaces, GenAI might at a minimum be able to propose more robust designs, and maybe make some strides to generating the implementation too.

Exploring the topic characteristics

I’m still adding to, and changing the below list, but it’s reached a point of relative stability. What it needs now is broader input. That’s where you, the reader, can perhaps help.

The following characteristics are captured primarily from the consumers point of view. They are based on the question “what might you need to know in order to incorporate a given topic into a solution”. They won’t all be as important as one another, and some will be more important for certain types of solution.

Event Content

What is inside the events themselves including their payload and headers.

- Business scenario: A descriptive text noting how the events relate to the core business processes of the enterprise. This is the only non-technical characteristic and should enable anyone from the business to recognise when the event would be created, and roughly what the data within it would mean.

- Topic owner: Who, or what part of the business, should you contact for more information about the topic?

- Record completeness: Does the event data always represents a complete record within the source system from which it comes, or do you sometimes get partial records such as one only showing which fields have changed?

- Business contextualization: Do the events have enough information to describe a business event, or are they low level data events such as those often resulting from change data capture on highly normalised database tables? This might mean a consumer would need to retrieve additional data before being able to process the event meaningfully.

- Schema: Is the data in the events described by a formal schema, and can consumers rely on that schema being enforced for all messages

- Business data key: Is there a data field that uniquely identifies the instance of a business object that the event refers to, such as a customer reference number, or product identifier?

- Unique event identifier: Is there a field that uniquely identifies each event emitted from the source that could be used by the consumer to spot and filter duplicates?

- Event scope: Will the consumer be interested in all the events on the topic, or only a selection of them? For example, if a topic contains all sales globally, but the consumer only requires sales for a specific country.

- Headers and metadata: What data is carried/required over and above the core business schema? Mostly these will relate to security, but they might also be used to describe other aspects of the payload (a schema reference), or it’s encoding, or subtleties of the interaction pattern. Sometimes payload data is repeated in headers for example to save a consumer from parsing the payload just to route the event.

- Wire format: What data serialization is used to encode data in the payload of the event? e.g. JSON, Avro, Protobuf, XML

- Timestamp: Does the data contain a timestamp that represents the time that the event actually occurred in the source system or device? This can be important for understanding the true sequence of events, and also for understanding how current the data is.

- Data lineage and quality: This is a “catch all” for a suite of characteristics relating to where the data comes from. What systems/devices emitted the data? To what extent can it be trusted? Is it raw data or has it been interpreted in some way, and if so, do we have transparency into what algorithms were used? Who owns the data or is accountable for it?

Interaction pattern

The implications of the way you interact with the event implementation.

- Subscription: How do developers gain access to the events on the topic? Is there a self-service mechanism, or do they need to make a request? Do they need approval?

- Protocol: Over which protocols can you access the events on the topic? e.g. Kafka, AMQP, JMS, IBM MQ APIs, MQTT, web hooks, polling HTTP, web sockets.

- Event grouping: Do consumers typically process one event at a time, or is the default to receive the events in sets? Grouping can improve performance but can also dramatically affect aspects such as how error handling is performed.

- Consumer based selection: Does the event implementation provide a way to resolve event scope problems? For example, are consumers able to select to receive only subsets of the topic in their subscription or do they have to consume the whole topic?

- Transactional breadth: Can you combine multiple interactions as a single ACID transaction, potentially across topics, and perhaps even with separate resources such as databases?

- Receipt assurance: How confident can we be that we will receive all the messages from the producing system — no assurance at all, at most once, at least once, or exactly once.

Stream Content

How the events relate to one another within a stream.

- Duplicates: Might the same event be received twice by the consumer? These could for example be as a result of duplicates created at source, or the receipt assurance choices, or the available method of consumption.

- Concurrent consumption: Can topic processing be spread across multiple consumers to increase throughput? Are there any limits to the number of concurrent consumers?

- Sequencing and lateness: Will the consumer always receive the events in the same order as they were emitted by the source?

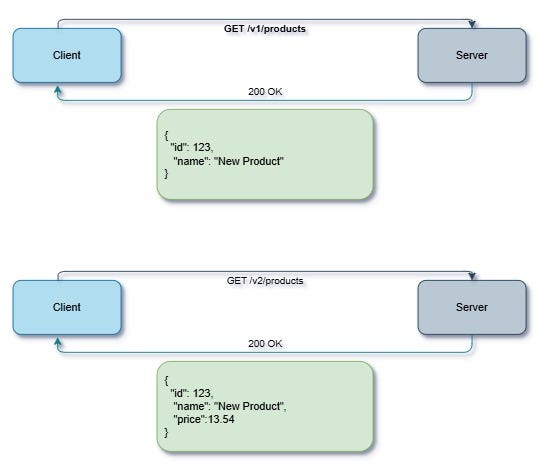

- Versioning strategy: Are changes to the schema guaranteed to be compatible with existing consumers. How will breaking changes be managed?

- Source replication completeness: If you read from the beginning of the stream, will you completely replicate the source system’s data? For example, would you be able to create a complete reference copy of the source based on the stream alone?

- Transactional read visibility: Is it possible for consumers to read events that have been published by a producer as part of a transaction but not yet committed? Is this choice configurable by the consumer, or fixed for the topic?

- State model: Do events on the stream represent changes in a state model such that only certain transitions are possible? The simplest example of this is where events are replicating changes in a database. There is an implicit state model for changes to database rows. They must begin with a “create”, which can be followed by any number of “updates”, or a “delete”, after which there can be no more “updates”. Knowing that a state model exists (and how tightly it is adhered to) can make it much easier for a consumer to process it.

- Durability: Are the events persisted to disk, or only held in the memory of the pub/sub implementation? Is there a possibility events could be lost during a single component failure?

- Archiving: Are the events in the stream archived, and if so, is it based on time, event count, bytes? Does it include strategies such as compaction (retaining the most recent record for each key)? Note that compaction may make it possible to retain source replication completeness even after archiving has taken place.

Non-functionals

Aspects related to performance and availability.

- Source to topic latency: What is the lag between the time the original event occurred, and the time it is made available for consumption. For example, following an event being emitted by a device, there could be various network latencies, and buffered transports before it is finally available to be picked up by consumers. Latency affects how “current” the consumer data can be and might also affect sequencing.

- Event size: Metrics relating to the typical size of messages on the topic, such as maximum, minimum, average and standard deviation. This will be important in estimating the memory and disk requirements of the consumer, and any target storage systems.

- Event rate: The number of events received over a period of time as a maximum and an average. This is important for estimating the CPU required to process the events. It can also be combined with event size to work out the downstream memory and storage requirements.

- Quotas: Are there any limits posed on how much can be drawn from a topic over a period of time. It might be that consumers are not allowed to draw down enough events at peak times to keep up with the stream. This would force a source to topic latency around those times. Are there different options for accessing the topic that have different quotas (perhaps at an extra cost) that therefore reduce/remove the likelihood of latency?

- Compression: Are the events on the topic compressed? This will reduce bandwidth requirements and affect the latency at the point of consumption. It may however come at the cost of additional CPU for the consumer. These aspects can be particularly important when receiving events over a variable network, onto a device with limited power (e.g. battery based). As such it may be important to know if compression is done by the producer and is therefore unavoidable, or just on the consuming side, and if so whether the consumer can configure whether to compress or not.

- Service availability: On failure, can you continue consuming at least some messages from the topic). This will likely be represented using the traditional measures for high availability such as “nines” (e.g. 3 nines means available 99.9% of the time).

- Data availability: On failure, how soon will I regain access to all messages on the topic, such that these can be aligned to business priorities of the solution. This is typically measured in terms of time to recovery of the full data set.

- Availability schedule: Are there planned outages at known times. Are these on a regular schedule, or do consumers receive advanced notifications of ad hoc outages.

- Replication spread: How many replicas of the events are held, how well are they distributed across nodes, availability zones and regions and where is the replication between them synchronous vs asynchronous. This provides an understanding of the risk of data loss in the event of failures at various scopes.

Security

How access to the events is controlled.

- Encryption: Is the data in the events encrypted? Is it encrypted on the wire (in the transmission protocol), and is it encrypted when it lands on disk? At which legs of the journey is it encrypted: publishing, internal replication, consumption? Is it just the payload that is encrypted, or the metadata in the headers also? Is the consumption encryption enforced, or a choice made by the consumer? How are the decryption keys/certificates made available? How are keys refreshed, and what does that mean for persisted data?

- Topic access: Is the topic accessible to anyone on the network, or only by specific groups/roles within the organisation, or does it require specific access to be set up by an administrator? Can consumers gain access using a self-service mechanism? Once access is requested, is there a manual approval required or is the access immediate?

- Event data access: Are certain elements of the schema unavailable to some audiences? For example, will some consumers find fields of sensitive information to be redacted? Is this dynamic based on the consumer (i.e. the data present might change if the credentials are changed) or are there different topics available to each role? Are there specific licensing conditions related to use or forward distribution of the data?

- Event instance access: Can consumers read/decrypt all events or only a selection of them pertinent to their role?

- Compliance: Has the topic been specifically engineered to comply with compliance regulations such as GDPR, PCI, FISMA, FIPS etc.

- Security model: What security mechanisms are available or enforced on the transmission protocol used for consumption for authentication and authorisation (e.g. TLS, LDAP, OAuth, SASL etc.).

If you’ve made it this far, well done, I have a request!

Please evaluate the list above in relation to your own projects and solutions. Is anything missing? Is anything unclear? Perhaps most critically, which characteristics are most important. Which made you think “oh yeah, I wish I’d known that before my last project!”. There are about 40 at the moment. They won’t all be important to all projects. I have already done some work on prioritising them, but further input would be greatly appreciated, so feel free to add comments.